While large language models (LLMs) have mastered text (and other modalities to some extent), they lack the physical “common sense” to operate in dynamic, real-world environments. This has limited the deployment of AI in areas like manufacturing and logistics, where understanding cause and effect is critical.

Meta’s latest model, V-JEPA 2, takes a step toward bridging this gap by learning a world model from video and physical interactions.

V-JEPA 2 can help create AI applications that require predicting outcomes and planning actions in unpredictable environments with many edge cases. This approach can provide a clear path toward more capable robots and advanced automation in physical environments.

How a ‘world model’ learns to plan

Humans develop physical intuition early in life by observing their surroundings. If you see a ball thrown, you instinctively know its trajectory and can predict where it will land. V-JEPA 2 learns a similar “world model,” which is an AI system’s internal simulation of how the physical world operates.

The model is built on three core capabilities that are essential for enterprise applications: understanding what is happening in a scene, predicting how the scene will change based on an action, and planning a sequence of actions to achieve a specific goal. As Meta states in its blog, its “long-term vision is that world models will enable AI agents to plan and reason in the physical world.”

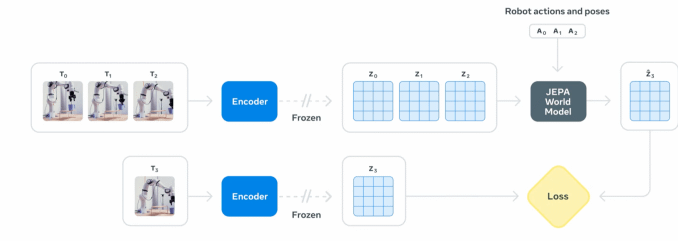

The model’s architecture, called the Video Joint Embedding Predictive Architecture (V-JEPA), consists of two key parts. An “encoder” watches a video clip and condenses it into a compact numerical summary, known as an embedding. This embedding captures the essential information about the objects and their relationships in the scene. A second component, the “predictor,” then takes this summary and imagines how the scene will evolve, generating a prediction of what the next summary will look like.
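The encoder and predictor roles described above can be illustrated with a toy Python sketch. Everything in it is an assumption made for illustration: the function names `encode`, `predict` and `latent_loss`, the averaging encoder, the linear predictor and the absolute-error loss are stand-ins, not Meta’s implementation, which uses large learned neural networks.

```python
from typing import List

Frame = List[float]      # a flattened frame, toy stand-in for pixels
Embedding = List[float]  # compact numerical summary of a clip


def encode(clip: List[Frame], dim: int = 4) -> Embedding:
    """Toy encoder: average the frames, then pool the result into `dim` buckets."""
    n_pixels = len(clip[0])
    mean_frame = [sum(f[i] for f in clip) / len(clip) for i in range(n_pixels)]
    bucket = n_pixels // dim
    return [sum(mean_frame[k * bucket:(k + 1) * bucket]) / bucket for k in range(dim)]


def predict(embedding: Embedding, weights: List[List[float]]) -> Embedding:
    """Toy predictor: a linear map from the current embedding to the next one."""
    return [sum(w * x for w, x in zip(row, embedding)) for row in weights]


def latent_loss(predicted: Embedding, target: Embedding) -> float:
    """Mean absolute error, measured between embeddings rather than pixels."""
    return sum(abs(p - t) for p, t in zip(predicted, target)) / len(predicted)


# Usage: summarize the current clip, guess the next summary, compare with the real one.
clip_now = [[0.0] * 8, [1.0] * 8]
clip_next = [[1.0] * 8, [1.0] * 8]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

predicted = predict(encode(clip_now), identity)
error = latent_loss(predicted, encode(clip_next))
```

The point of the sketch is where the error is measured: the prediction is compared against the embedding of the next clip, so the model is never asked to reproduce individual pixels.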

This architecture is the latest evolution of the JEPA framework, which was first applied to images with I-JEPA and now advances to video, demonstrating a consistent approach to building world models.

Unlike generative AI models that try to predict the exact color of every pixel in a future frame, a computationally intensive task, V-JEPA 2 operates in an abstract space. It focuses on predicting the high-level features of a scene, such as an object’s position and trajectory, rather than its texture or background details. At just 1.2 billion parameters, it is also far more efficient than larger models.

That translates to lower compute costs and makes it more suitable for deployment in real-world settings.

Learning from observation and action

V-JEPA 2 is trained in two stages. First, it builds its foundational understanding of physics through self-supervised learning, watching over one million hours of unlabeled internet videos. By simply observing how objects move and interact, it develops a general-purpose world model without any human guidance.

In the second stage, this pre-trained model is fine-tuned on a small, specialized dataset. By processing just 62 hours of video showing a robot performing tasks, along with the corresponding control commands, V-JEPA 2 learns to connect specific actions to their physical outcomes. This results in a model that can plan and control actions in the real world.

This two-stage training enables a critical capability for real-world automation: zero-shot robot planning. A robot powered by V-JEPA 2 can be deployed in a new environment and successfully manipulate objects it has never encountered before, without needing to be retrained for that specific setting.

This is a significant advance over previous models that required training data from the exact robot and environment where they would operate. The model was trained on an open-source dataset and then successfully deployed on different robots in Meta’s labs.

For example, to complete a task like picking up an object, the robot is given a goal image of the desired outcome. It then uses the V-JEPA 2 predictor to internally simulate a range of possible next moves. It scores each imagined action based on how close it gets to the goal, executes the top-rated action, and repeats the process until the task is complete.

Using this method, the model achieved success rates between 65% and 80% on pick-and-place tasks with unfamiliar objects in new settings.
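The plan, score, execute and repeat loop described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not Meta’s planner: the scene embedding is reduced to a 2D point, `predict_next` is a hypothetical stand-in for the V-JEPA 2 predictor, candidate actions are drawn by plain random sampling, and the toy world is assumed to behave exactly as predicted.

```python
import random
from typing import List, Tuple

State = Tuple[float, float]   # stand-in for a scene embedding
Action = Tuple[float, float]  # stand-in for a robot control command


def predict_next(state: State, action: Action) -> State:
    """Hypothetical predictor: imagine the embedding after taking `action`."""
    return (state[0] + action[0], state[1] + action[1])


def distance(a: State, b: State) -> float:
    """Score: how far an imagined state is from the goal (lower is better)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def plan_step(state: State, goal: State, rng: random.Random,
              n_candidates: int = 64, max_step: float = 0.2) -> Action:
    """Sample candidate actions, imagine each outcome, return the best one."""
    candidates: List[Action] = [
        (rng.uniform(-max_step, max_step), rng.uniform(-max_step, max_step))
        for _ in range(n_candidates)
    ]
    # Including "stay still" guarantees a step never moves away from the goal.
    candidates.append((0.0, 0.0))
    return min(candidates, key=lambda a: distance(predict_next(state, a), goal))


def run_to_goal(start: State, goal: State, tol: float = 0.05,
                max_steps: int = 200, seed: int = 0) -> Tuple[State, int]:
    """Replan after every executed action until the goal is reached."""
    rng = random.Random(seed)
    state = start
    for step in range(max_steps):
        if distance(state, goal) <= tol:
            return state, step
        action = plan_step(state, goal, rng)
        state = predict_next(state, action)  # toy world: execution matches prediction
    return state, max_steps


final_state, steps_used = run_to_goal(start=(0.0, 0.0), goal=(1.0, -0.5))
```

Only the first action of each plan is executed before the robot replans from its new state. On a real robot, where outcomes can differ from what was imagined, this replanning is what lets the loop correct for prediction errors.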

Real-world impact of physical reasoning

This ability to plan and act in novel situations has direct implications for business operations. In logistics and manufacturing, it allows for more adaptable robots that can handle variations in products and warehouse layouts without extensive reprogramming. This can be especially useful as companies are exploring the deployment of humanoid robots in factories and assembly lines.

The same world model can power highly realistic digital twins, allowing companies to simulate new processes or train other AIs in a physically accurate virtual environment. In industrial settings, a model could monitor video feeds of machinery and, based on its learned understanding of physics, predict safety issues and failures before they happen.

This research is a key step toward what Meta calls “advanced machine intelligence (AMI),” where AI systems can “learn about the world as humans do, plan how to execute unfamiliar tasks, and efficiently adapt to the ever-changing world around us.”

Meta has released the model and its training code and hopes to “build a broad community around this research, driving progress toward our ultimate goal of developing world models that can transform the way AI interacts with the physical world.”

What it means for enterprise technical decision-makers

V-JEPA 2 moves robotics closer to the software-defined model that cloud teams already recognize: pre-train once, deploy anywhere. Because the model learns general physics from public video and only needs a few dozen hours of task-specific footage, enterprises can slash the data-collection cycle that typically drags down pilot projects. In practical terms, you can prototype a pick-and-place robot on an affordable desktop arm, then roll the same policy onto an industrial rig on the factory floor without gathering thousands of fresh samples or writing custom motion scripts.

Lower training overhead also reshapes the cost equation. At 1.2 billion parameters, V-JEPA 2 fits comfortably on a single high-end GPU, and its abstract prediction targets reduce inference load further. That lets teams run closed-loop control on-prem or at the edge, avoiding cloud latency and the compliance headaches that come with streaming video outside the plant. Budget that once went to massive compute clusters can fund extra sensors, redundancy, or faster iteration cycles instead.
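The single-GPU claim can be sanity-checked with back-of-the-envelope arithmetic. The sketch below assumes the weights are stored in 32-bit or 16-bit floating point, which the article does not specify, and it counts weights only, so activations and video buffers would add to these figures.

```python
PARAMS = 1_200_000_000  # parameter count cited in the article

# Assumed storage precisions; the article does not say which one is used.
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_memory_gb(params: int, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in gigabytes (10**9 bytes)."""
    return params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    print(f"{name}: {weight_memory_gb(PARAMS, size):.1f} GB")
# float32: 4.8 GB
# float16: 2.4 GB
```

Under these assumptions the weights take roughly 2.4 GB to 4.8 GB, well within the memory of a single high-end GPU.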
