OpenAI has announced four targeted updates to its AI agent development stack, aimed at expanding platform compatibility, improving support for voice interfaces, and enhancing observability. The updates continue a steady move toward practical, controllable, and auditable AI agents that can be integrated into real-world applications across client and server environments.

1. TypeScript Support for the Agents SDK

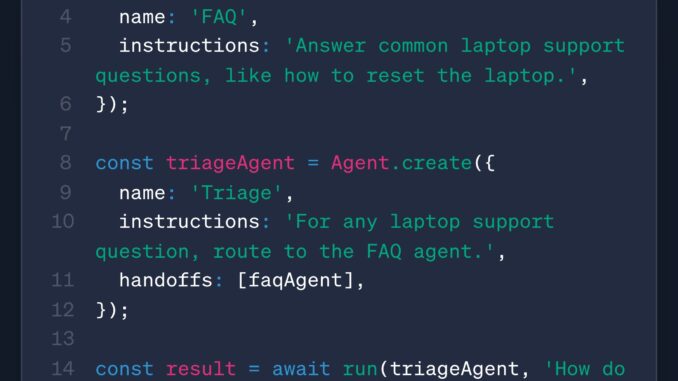

OpenAI’s Agents SDK is now available in TypeScript, extending the existing Python implementation to developers working in JavaScript and Node.js environments. The TypeScript SDK provides parity with the Python version, including foundational components such as:

Handoffs: Mechanisms for delegating a task from one agent to another.

Guardrails: Runtime checks that validate agent inputs and outputs against defined boundaries.

Tracing: Hooks for collecting structured telemetry during agent execution.

MCP (Model Context Protocol): An open protocol for connecting agents to external tools and context sources.

With this addition, the SDK fits JavaScript-based web and cloud-native application stacks. Developers can now build and deploy agents in both frontend (browser) and backend (Node.js) contexts using a single set of abstractions. The documentation is available at openai-agents-js.
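To make the roles of handoffs, guardrails, and tracing more concrete, here is a minimal conceptual sketch in plain TypeScript. It does not use the Agents SDK: every name in it (`SketchAgent`, `Guardrail`, `TraceEvent`, `runSketch`) is hypothetical and exists only for illustration, and the real SDK's API may look quite different.

```typescript
// Conceptual sketch only: hypothetical types, NOT the Agents SDK API.

// A guardrail inspects the input and either allows it or gives a reason to block.
type Guardrail = (input: string) => { ok: boolean; reason?: string };

interface SketchAgent {
  name: string;
  // Returns a final answer, or the name of another agent to hand off to.
  handle: (input: string) => { answer: string } | { handoffTo: string };
}

// One structured record per step, standing in for tracing telemetry.
interface TraceEvent {
  agent: string;
  kind: "guardrail_blocked" | "handoff" | "answer";
  detail: string;
}

function runSketch(
  agents: Map<string, SketchAgent>,
  start: string,
  input: string,
  guardrails: Guardrail[],
  maxHops = 5,
): { output: string; trace: TraceEvent[] } {
  const trace: TraceEvent[] = [];

  // Guardrails run before any agent sees the input.
  for (const check of guardrails) {
    const verdict = check(input);
    if (!verdict.ok) {
      const reason = verdict.reason ?? "unspecified";
      trace.push({ agent: start, kind: "guardrail_blocked", detail: reason });
      return { output: `Blocked: ${reason}`, trace };
    }
  }

  // Follow handoffs until some agent produces an answer.
  let current = start;
  for (let hop = 0; hop <= maxHops; hop++) {
    const agent = agents.get(current);
    if (!agent) throw new Error(`Unknown agent: ${current}`);
    const result = agent.handle(input);
    if ("answer" in result) {
      trace.push({ agent: current, kind: "answer", detail: result.answer });
      return { output: result.answer, trace };
    }
    trace.push({ agent: current, kind: "handoff", detail: result.handoffTo });
    current = result.handoffTo;
  }
  throw new Error("Too many handoffs");
}

// Hypothetical agents: triage routes refund questions to billing.
const triage: SketchAgent = {
  name: "triage",
  handle: (input) =>
    input.toLowerCase().includes("refund")
      ? { handoffTo: "billing" }
      : { answer: "General support can help with that." },
};
const billing: SketchAgent = {
  name: "billing",
  handle: () => ({ answer: "Billing will process your refund request." }),
};
const agents = new Map<string, SketchAgent>([
  ["triage", triage],
  ["billing", billing],
]);

// Hypothetical guardrail: refuse input containing a 16-digit number.
const noCardNumbers: Guardrail = (input) =>
  /\b\d{16}\b/.test(input)
    ? { ok: false, reason: "card number detected" }
    : { ok: true };

const routed = runSketch(agents, "triage", "I need a refund", [noCardNumbers]);
const blocked = runSketch(agents, "triage", "My card is 4111111111111111", [noCardNumbers]);
console.log(routed.output, routed.trace);
console.log(blocked.output, blocked.trace);
```

In this sketch the trace is just an array returned with the result. The point is only that each handoff and guardrail decision leaves a structured record that can be inspected afterwards.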

2. RealtimeAgent with Human-in-the-Loop Capabilities

OpenAI introduced a new RealtimeAgent abstraction to support latency-sensitive voice applications. RealtimeAgents extend the Agents SDK with audio input/output, stateful interactions, and interruption handling.

One of the more substantial features is human-in-the-loop (HITL) approval, allowing developers to intercept an agent’s execution at runtime, serialize its state, and require manual confirmation before continuing. This is especially relevant for applications requiring oversight, compliance checkpoints, or domain-specific validation during tool execution.

Developers can pause execution, inspect the serialized state, and resume the agent with full context retention. The workflow is described in detail in OpenAI’s HITL documentation.
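The pause, serialize, approve, and resume cycle can be sketched in a few lines of plain TypeScript. This is an illustration of the pattern only, under the assumption that state is serialized as JSON. The names `PausedState`, `startRun`, `resumeRun`, and the `issue_refund` tool are hypothetical and are not taken from the SDK or from OpenAI's HITL documentation.

```typescript
// Conceptual sketch only: hypothetical names, NOT the Agents SDK API.

interface PendingCall {
  tool: string;
  args: Record<string, unknown>;
}

// Everything needed to continue later: prior history plus the call awaiting approval.
interface PausedState {
  history: string[];
  pending: PendingCall;
}

type RunOutcome =
  | { status: "paused"; serialized: string }
  | { status: "done"; history: string[] };

// Assumption for this sketch: only these tools require a human decision.
const sensitiveTools = new Set(["issue_refund"]);

function executeTool(call: PendingCall): string {
  return `${call.tool} executed with ${JSON.stringify(call.args)}`;
}

// Runs until a sensitive tool call is reached, then pauses and serializes state.
function startRun(request: string, call: PendingCall): RunOutcome {
  const history = [`user: ${request}`];
  if (sensitiveTools.has(call.tool)) {
    const state: PausedState = { history, pending: call };
    return { status: "paused", serialized: JSON.stringify(state) };
  }
  history.push(executeTool(call));
  return { status: "done", history };
}

// Restores the serialized state and continues according to the reviewer's decision.
function resumeRun(serialized: string, approved: boolean): RunOutcome {
  const state = JSON.parse(serialized) as PausedState;
  const history = [...state.history];
  history.push(
    approved
      ? executeTool(state.pending)
      : `${state.pending.tool} rejected by reviewer`,
  );
  return { status: "done", history };
}

const first = startRun("Refund order 42", {
  tool: "issue_refund",
  args: { orderId: 42 },
});
// The serialized string could be stored and reviewed before resuming.
const resumed = first.status === "paused" ? resumeRun(first.serialized, true) : first;
console.log(first.status, resumed);
```

Because the paused state is a plain string, it could be stored in a database or queue while a reviewer decides, and the run could be resumed later by a different process.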

3. Traceability for Realtime API Sessions

Complementing the RealtimeAgent feature, OpenAI has expanded the Traces dashboard to include support for voice agent sessions. Tracing now covers full Realtime API sessions, whether they are initiated via the SDK or directly through API calls.

The Traces interface allows visualization of:

Audio inputs and outputs (streamed or buffered)

Tool invocations and parameters

User interruptions and agent resumptions

This provides a consistent audit trail for both text-based and audio-first agents, simplifying debugging, quality assurance, and performance tuning across modalities. The trace format is standardized and integrates with OpenAI’s broader monitoring stack, offering visibility without requiring additional instrumentation.
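As a rough picture of what such an audit trail contains, the sketch below models the event categories listed above as a TypeScript union and reduces a session to a short summary. The event shape, field names, and the `summarize` function are assumptions made for illustration and are not the actual trace format used by the Traces dashboard.

```typescript
// Conceptual sketch only: a hypothetical event shape, NOT the real trace format.

type SessionEvent =
  | { kind: "audio_in"; atMs: number; durationMs: number }
  | { kind: "audio_out"; atMs: number; durationMs: number }
  | { kind: "tool_call"; atMs: number; tool: string; params: Record<string, unknown> }
  | { kind: "interruption"; atMs: number }
  | { kind: "resumption"; atMs: number };

interface SessionSummary {
  audioInMs: number;
  audioOutMs: number;
  toolsCalled: string[];
  interruptions: number;
  resumptions: number;
}

// Folds a list of session events into totals per category.
function summarize(events: SessionEvent[]): SessionSummary {
  const summary: SessionSummary = {
    audioInMs: 0,
    audioOutMs: 0,
    toolsCalled: [],
    interruptions: 0,
    resumptions: 0,
  };
  for (const event of events) {
    switch (event.kind) {
      case "audio_in":
        summary.audioInMs += event.durationMs;
        break;
      case "audio_out":
        summary.audioOutMs += event.durationMs;
        break;
      case "tool_call":
        summary.toolsCalled.push(event.tool);
        break;
      case "interruption":
        summary.interruptions += 1;
        break;
      case "resumption":
        summary.resumptions += 1;
        break;
    }
  }
  return summary;
}

// A made-up voice session: the user speaks, interrupts the reply, then the agent resumes.
const sessionEvents: SessionEvent[] = [
  { kind: "audio_in", atMs: 0, durationMs: 1200 },
  { kind: "audio_out", atMs: 1300, durationMs: 2000 },
  { kind: "interruption", atMs: 2500 },
  { kind: "resumption", atMs: 2600 },
  { kind: "tool_call", atMs: 2700, tool: "lookup_order", params: { orderId: 42 } },
  { kind: "audio_out", atMs: 3000, durationMs: 1500 },
];

const sessionSummary = summarize(sessionEvents);
console.log(sessionSummary);
```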

Further implementation details are available in the voice agent guide at openai-agents-js/guides/voice-agents.

4. Refinements to the Speech-to-Speech Pipeline

OpenAI has also made updates to its underlying speech-to-speech model, which powers real-time audio interactions. Enhancements focus on reducing latency, improving naturalness, and handling interruptions more effectively.

While the model’s core capabilities (speech recognition, synthesis, and real-time feedback) remain in place, the refinements make it better suited to dialog systems where responsiveness and tone variation are essential. They include:

Lower latency streaming: More immediate turn-taking in spoken conversations.

Expressive audio generation: Improved intonation and pause modeling.

Robustness to interruptions: Agents can respond gracefully to overlapping input.

These changes align with OpenAI’s broader efforts to support embodied and conversational agents that function in dynamic, multimodal contexts.

Conclusion

Together, these four updates strengthen the foundation for building voice-enabled, traceable, and developer-friendly AI agents. By providing deeper integrations with TypeScript environments, introducing structured control points in real-time flows, and enhancing observability and speech interaction quality, OpenAI continues to move toward a more modular and interoperable agent ecosystem.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
